GPU Kernels

In Julia, CUDA.jl already provides optimized GPU implementations of many common operations. Simply applying a function to a CuArray runs operations such as broadcasting and map-reduce as GPU-specialized code. More complex tasks, such as the self-attention operation used in machine learning algorithms, have optimized implementations through cuDNN.jl.
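As a minimal sketch of this array-level style, assuming CUDA.jl is installed and a CUDA-capable GPU is available, the snippet below runs a broadcast and a map-reduce on a small CuArray. The variable names and values are illustrative only.

```julia
using CUDA

# Move a small vector to the GPU; Float32 is the usual element type for GPU work.
x = CuArray(Float32[1, 2, 3, 4])

# Broadcasting: the dotted expression is fused and executed on the GPU.
y = 2f0 .* x .+ 1f0

# Map-reduce: square each element and sum the results on the GPU.
s = mapreduce(abs2, +, x)

# Copy the broadcast result back to the host to inspect it.
y_host = Array(y)   # Float32[3, 5, 7, 9]
println(y_host)
println(s)          # 30.0
```

No kernel is written by hand here; CUDA.jl generates and launches the GPU code behind these calls.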

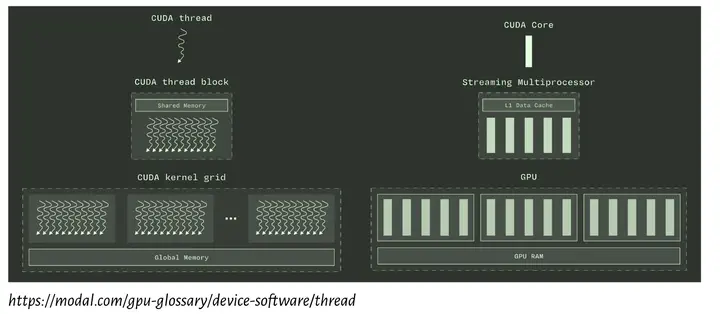

These operations are implemented as GPU kernels: functions that run on the GPU and exploit its CUDA architecture.
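To give a first impression of what a kernel looks like, here is a rough sketch of an element-wise addition written with CUDA.jl's `@cuda` macro. The kernel name `add_kernel!`, the array size, and the launch configuration are hypothetical choices made for illustration, not tuned values.

```julia
using CUDA

# Hypothetical kernel: each GPU thread handles one element of the arrays.
function add_kernel!(c, a, b)
    # Global 1-based index of this thread across all blocks.
    i = (blockIdx().x - 1) * blockDim().x + threadIdx().x
    # Guard: the last block may contain more threads than remaining elements.
    if i <= length(c)
        @inbounds c[i] = a[i] + b[i]
    end
    return nothing  # kernels must not return a value
end

n = 1000
a = CUDA.fill(1f0, n)
b = CUDA.fill(2f0, n)
c = CUDA.zeros(Float32, n)

threads = 256               # threads per block (illustrative choice)
blocks = cld(n, threads)    # enough blocks to cover all n elements
@cuda threads=threads blocks=blocks add_kernel!(c, a, b)

# Copying back to the host waits for the kernel to finish.
c_host = Array(c)
println(all(c_host .== 3f0))
```

In practice this particular operation is better written as `a .+ b`; the sketch only shows the shape of a kernel: an index computed from the thread and block IDs, a bounds check, and an explicit launch configuration.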

Today, we will show how to write such kernels yourself, for cases where no optimized kernel exists yet.

Downloadable contents

Environment files: manifest | project

Julia scripts: demo sandbox | softmax examples